Retrieval-Augmented Generation (RAG) has fundamentally transformed our approach to handling vast datasets. Essentially, within RAG, the retrieval mechanism involves accessing pertinent external data to amplify the generation of responses by large language models (LLMs).

By seamlessly integrating external sources, these models can generate responses that surpass mere accuracy and detail, they become contextually enriched, particularly when addressing queries that demand precise or up-to-date knowledge.

In this guide, Hexon Global’s engineer Hemant Kumar explores the different search methods in RAG: Keyword Search, Vector or Semantic Search, and Hybrid Search.

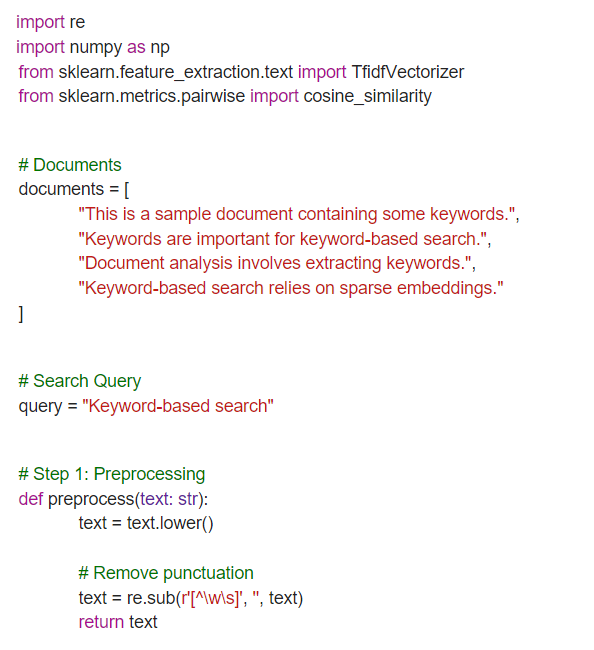

Keyword Search

Keyword search, which scans documents for the exact words or phrases in the query. This method relies on generating sparse embeddings, where most values in the vector are zero except for a select few non-zero values. These sparse embeddings are created using algorithms like BM25 and the traditional TF-IDF (Term Frequency-Inverse Document Frequency).

Keyword Search Process

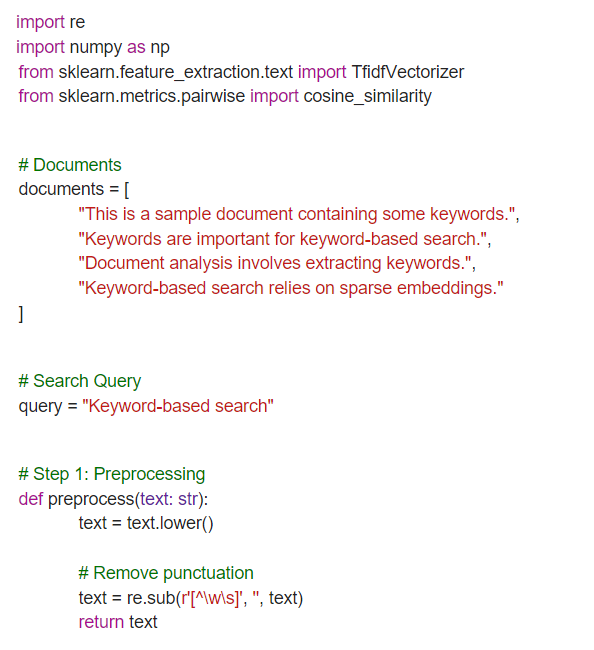

Preprocessing

Before searching, both the query and documents undergo preprocessing to extract essential keywords and eliminate irrelevant noise.

Sparse embedding creation

For Each document and search query, a sparse embedding is generated based on the frequency of keywords within that document relative to their occurrence. Embedding are generated by algorithms like TF-IDF and BM25.

Similarity calculation

The relevance of a document to the query is assessed by measuring the similarity between the query embedding and the embeddings of the documents, typically using cosine similarity or a similar metric.

Ranking

Based on their similarity scores the documents are ranked, so the most relevant ones show up first in the search results.

Limitations of Keyword Search

Exact matching

If search query is “cats”, it will not match the documents which contains the word “cat”.

Ambiguity

Words like “apple” can be ambiguous. For instance, if the query is only “apple,” it’s unclear if it refers to the fruit, the tech company, or something else. Traditional search engines struggle to understand such context from words alone.

Synonyms

Certain words may vary in spelling but denote the same concept, such as “car” and “automobile” while different in wording they represent the same idea.

Misspelling

In keyword search, spelling accuracy is crucial. If a word is misspelled, it can prevent users from finding the results they’re looking for.

Keyword Search Code Example

Vector or Semantic Search

Semantic search uses the modern machine learning algorithms like BERT (Bidirectional Encoder Representations from Transformers) to generate dense embeddings. These embedding encapsulate rich semantic information and are typically characterized by non-zero values.

Semantic search offers the flexibility of conducting multi-lingual and multi-modal searches, it is resilience to typos, it can miss essential keywords. The effectiveness of semantic search heavily relies on the quality of the generated vector embeddings.

Semantic Search Process

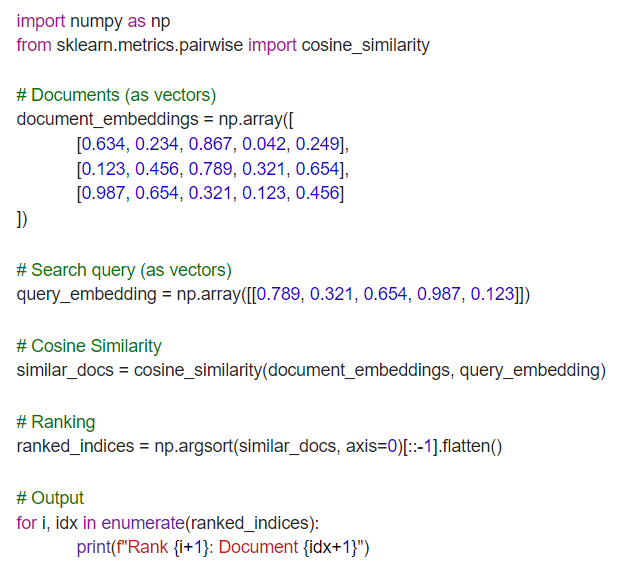

Embedding

To ensure consistency in semantic representation, a dense embedding is generated for each document and search query using the same embedding model, such as BERT. This approach ensures that both the document and the search query are encoded into dense vectors with identical semantic specifications. These models effectively capture the semantic meaning of text, encoding it into dense vectors for accurate retrieval.

Similarity calculation

After generating the embeddings for the query and documents, we use a metric like cosine similarity to measure the similarity between the search query embedding and document embeddings. It measures the angle between two vectors and provides a similarity ranging from -1 to 1. If the value is closer to 1, it means they are very similar.

Ranking

Based on their similarity scores, the documents are ranked, with those having higher similarity scores appearing first in the search results.

Semantic Search Code Example

Hybrid Search

Both keyword search and semantic search have their limitations. Keyword search may fail to capture relevant information expressed differently, while semantic search can sometimes struggle with providing specific results or overlook important statistical word patterns.

In hybrid search, we combine the strengths of both keyword and vector search techniques to achieve the best possible results. By combining these approaches, we can capitalize on the precision of keyword search in capturing explicit terms and the contextual understanding offered by semantic search.

Hybrid Search Process

Scoring

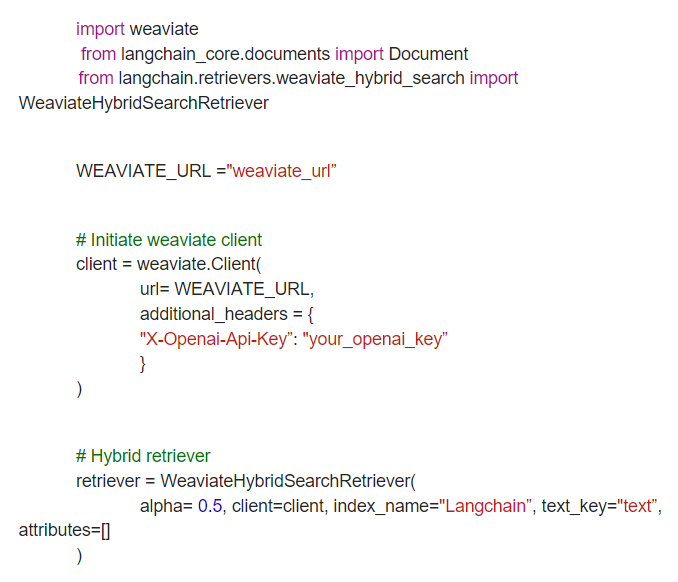

Both keyword and semantic search results are evaluated using metrics like cosine distance or positional rank within the results list. Each result is assigned a numerical score indicating its relevance to the search query.

Weighted Combination

The scores from both the keyword search and the standalone semantic search are combined using the alpha parameter.

The hybrid_score is determined by a formula:

hybrid_score = (1 – alpha) * sparse_score + alpha * dense_score

hybrid_score is the score obtained from the hybrid search.

alpha determines the weight assigned to each score, with values typically ranging between 0 and 1. 1 is pure semantic search and 0 is pure keyword search.

Re-ranking

The combined scores are used to rank the search results. Those with higher hybrid scores are given precedence in the final ranking, highlighting their increased relevance to the search query.

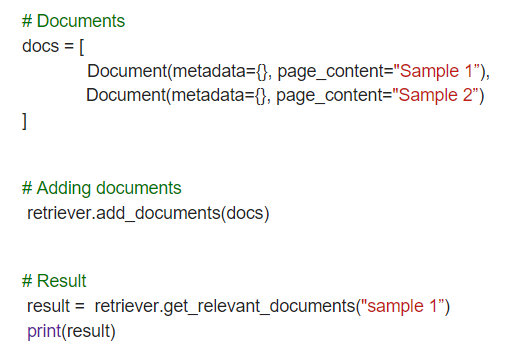

Hybrid Search Code Example

We will use a dedicated hybrid search component of Langchain:

Conclusion

By integrating outcomes from different search algorithms and refining the rankings, hybrid search offers a comprehensive approach to information retrieval.

Contact Us

Get in touch

Understand how we help our clients in various situations and how we can be of service to you!