What Is vLLM?

vLLM, an open-source project, has revolutionized the landscape of LLM model deployment. It provides a unified interface for deploying and serving LLM models, abstracting away complexities and enabling organizations to harness the power of these models for their applications. vLLM is a fast and easy-to-use library for LLM inference and serving. Here’s why it stands out:

- State-of-the-Art Serving Throughput:

- vLLM achieves remarkable performance, outperforming mainstream frameworks.

- It ensures efficient management of attention key and value memory using a novel mechanism called PagedAttention.

- Continuous batching of incoming requests further enhances throughput.

- Fast Model Execution with CUDA/HIP Graph:

- vLLM leverages GPU acceleration for faster inference.

- Optimized CUDA kernels contribute to its speed.

- Quantization and KV Cache:

- vLLM supports quantization techniques like GPTQ, AWQ and SqueezeLLM.

- Its KV Cache optimization improves memory efficiency.

- Flexible and Easy to Use:

- Seamlessly integrates with popular Hugging Face models.

- Supports various decoding algorithms, including parallel sampling and beam search.

- Tensor parallelism enables distributed inference.

- OpenAI-Compatible API Server:

- vLLM serves as an efficient API server for LLMs.

- It supports both NVIDIA GPUs and AMD GPUs (experimental).

Requirements

- OS: Linux

- Python: 3.8 – 3.11

- GPU: compute capability 7.0 or higher (e.g., V100, T4, RTX20xx, A100, L4, H100, etc.)

Setting Up vLLM on a EC2 AMI

Here is how you can set-up vLLM:

- Launch an EC2 instance

- Launch the Nvidia Driver GPU AMI

- Choose an instance type with NVIDIA GPUs (e.g., P3, P4d, G4dn). Select the Nvidia Driver GPU AMI from the aws Marketplace.

- SSH into the instance.

- Verify that the NVIDIA driver and Docker are installed.

- Prepare Your Environment (Optional)

- Launch the Nvidia Driver GPU AMI

- Although optional, creating a dedicated conda environment is recommended for better management of dependencies and packages.

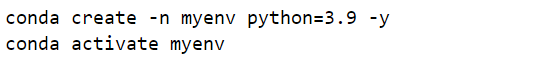

- Execute:

- Install vLLM

- Ensure your system is equipped with CUDA 12.1 to leverage GPU acceleration, enhancing vLLM’s performance.

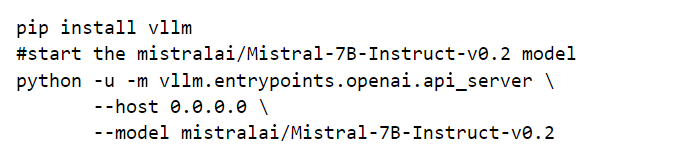

- Install vLLM:

Setting Up vLLM on Docker

- Docker Containers for Consistency

- Docker containers guarantee consistent behavior across various contexts. By encapsulating LLMs within containers, you ensure reproducibility and eliminate the age-old “it works on my machine” issue.

- When deploying LLMs using Docker, you can package the model, dependencies, and runtime environment together, simplifying deployment and maintenance.

- Docker and LLMs

- Docker is essential for running LLM inference images. It provides robust containerization technology that streamlines packaging, distribution, and operation.

- When deploying LLMs, consider creating a Docker image that includes the LLM model, any necessary libraries, and the inference code. This image can then be deployed on various platforms, including cloud services

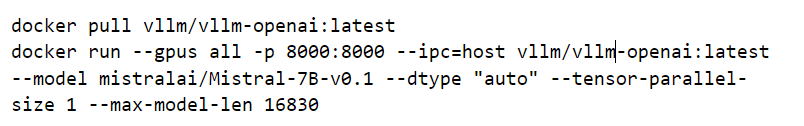

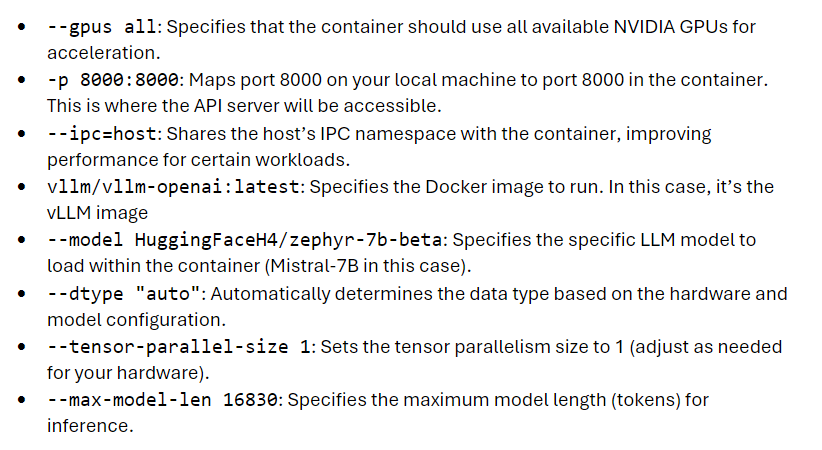

Deploying Mistral-7B with Docker

Pull and run Mistral-7B Docker Image:

Certainly! Below is the complete deployment command initializing a Docker container with the Mistral-7B model, allowing you to interact with it via an API on port 8000.

Expose the API:

Our model Exposed the API port on 8000.

Testing the Deployment

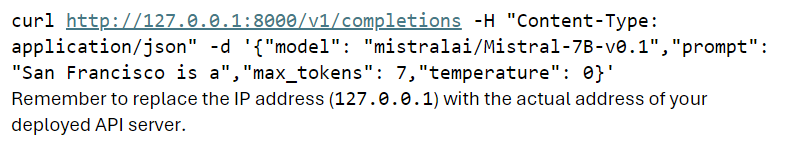

To verify that your Mistral-7B LLM deployment is functioning correctly, you can send requests to the API. Let’s use curl to test it:

- Open your terminal or command prompt.

- Run the following curl command to generate text completions based on a prompt:

Integrating vLLM into Your Workflow

- vLLM seamlessly integrates into modern workflows, enabling continuous integration and continuous deployment (CI/CD) practices. By leveraging containerization and orchestration tools like Docker and Kubernetes, you can automate the deployment and scaling of LLM models, ensuring consistent and reliable performance across different environments.

- Additionally, vLLM’s RESTful API allows for easy integration with monitoring and logging tools, enabling efficient tracking and management of LLM model deployments.

Conclusion

vLLM has become an indispensable tool in my arsenal, simplifying the deployment and management of LLM models. With its user-friendly interface, scalability, reproducibility, and cost-efficiency, vLLM empowers teams to focus on delivering value to their organizations while ensuring seamless deployment and management of these powerful AI models.

Contact Us

Get in touch

Understand how we help our clients in various situations and how we can be of service to you!